In the first post of this series we showed how to use the embedded secure element interface Android 4.x offers. Next, we used some GlobalPlatform commands to find out more about the SE execution environment in the Galaxy Nexus. We also showed that there is currently no way for third parties to install applets on the SE. Since installing our own applets is not an option, we will now find some pre-installed applets to explore. Currently the only generally available Android application that is known to install applets on the SE is Google's own Google Wallet. In this last post, we'll say a few words about how it works and then try to find out what publicly available information its applets host.

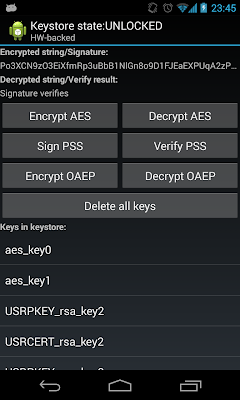

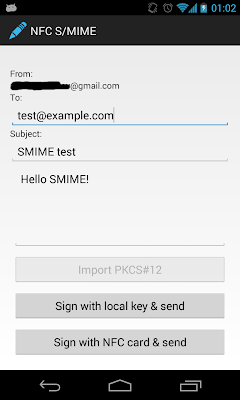

Google Wallet and the SE

To quote the Google Play description, 'Google Wallet holds your credit and debit cards, offers, and rewards cards'. How does it do this in practice though? The short answer: it's slightly complicated. The longer answer: only Google knows all the details, but we can observe a few things. After you install the Google Wallet app on your phone and select an account to use with it, it will contact the online Google Wallet service (previously known as Google Checkout), create or verify your account and then provision your phone. The provisioning process will, among other things, use First Data's Trusted Service Manager (TSM) infrastructure to download, install and personalize a bunch of applets on your phone. This is all done via the Card Manager and the payload of the commands is, of course, encrypted. However, the GP Secure Channel only encrypts the data part of APDUs, so it is fairly easy to map the install sequence on a device modified to log all SE communication. There are three types of applets installed: a Wallet controller applet, a MIFARE manager applet, and of course payment applets that enable your phone to interact with NFC-enabled PayPass terminals.

The controller applet securely stores Google Wallet state and event log data, but most importantly, it enables or disables contactless payment functionality when you unlock the Wallet app by entering your PIN. The latest version seems to have the ability to store and verify a PIN securely (inside the SE). However, the app does not appear to use it yet, since the Wallet Cracker can still recover the PIN on a rooted phone. This implies that the PIN hash is still stored in the app's local database.

The MIFARE manager applet works in conjunction with the offers and reward/loyalty cards features of Wallet. When you save an offer or add a loyalty card, the MIFARE manager applet will write block(s) to the emulated MIFARE 4K Classic card to mirror the offer or card on the SE, letting you redeem it by tapping your phone at an NFC-enabled POS terminal. It also keeps an application directory (similar to the standard MIFARE MAD) in the last sectors, which is updated each time you add or remove a card. The emulated MIFARE card uses custom sector protection keys, which are most probably initialized during the initial provisioning process. Therefore you cannot currently read the contents of the MIFARE card with an external reader. However, the encryption and authentication scheme used by MIFARE Classic has been broken and proven insecure, and the keys can be recovered easily with readily available tools. It would be interesting to see if the emulated card is susceptible to the same attacks.

Finally, there should be one or more EMV-compatible payment applets that enable you to pay with your phone at compatible POS terminals. EMV is an interoperability standard for payments using chip cards, and while each credit card company has their proprietary extensions, the common specifications are publicly available. The EMV standard specifies how to find out what payment applications are installed on a contactless card, and we will use that information to explore Google Wallet further later.

Armed with that basic information we can now extend our program to check if Google Wallet applets are installed. Google Wallet has been around for a while, so by now the controller and MIFARE manager applets' AIDs are widely known. However, we don't need to look further than latest AOSP code, since the system NFC service has those hardcoded. This clearly shows that while SE access code is being gradually made more open, its main purpose for now is to support Google Wallet. The controller AID is A0000004762010 and the MIFARE manager AID is A0000004763030. As you can see, they start with the same prefix (A000000476), which we can assume is the Google RID (there doesn't appear to be a public RID registry). Next step is, of course, trying to select those. The MIFARE manager applet responds with a boring 0x9000 status which only shows that it's indeed there, but selecting the controller applet returns something more interesting:

6f 0f -- File Control Information (FCI) Template

84 07 -- Dedicated File (DF) Name

a0 00 00 04 76 20 10 (BINARY)

a5 04 -- File Control Information (FCI) Proprietary Template

80 02 -- Response Message Template Format 1

01 02 (BINARY)

The 'File Control Information' and 'Dedicated File' names are file system-based card legacy terms, but the DF (equivalent to a directory) is the AID of the controller applet (which we already know), and the last piece of data is something new. Two bytes looks very much like a short value, and if we convert this to decimal we get '258', which happens to be the controller applet version displayed in the 'About' screen of the current Wallet app ('v258').
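The version extraction described above can be reproduced with a few lines of Java. This is only a minimal sketch: the `ControllerFci` class and its helpers are our own illustrative names (not part of the sample app or of Java EMV Reader), and the parser handles only the single-byte tags and short lengths that occur in this particular response.

```java
import java.util.Arrays;

// Illustrative helper (hypothetical name): walks the controller applet's
// SELECT response and reads the two-byte value as a big-endian short.
class ControllerFci {
    // Converts a hex string such as "6f0f84" to bytes.
    static byte[] fromHex(String hex) {
        byte[] out = new byte[hex.length() / 2];
        for (int i = 0; i < out.length; i++) {
            out[i] = (byte) Integer.parseInt(hex.substring(2 * i, 2 * i + 2), 16);
        }
        return out;
    }

    // Returns the value of the first TLV with the given one-byte tag found in
    // data[from, to), or null if absent. Only one-byte tags and lengths below
    // 0x80 are supported, which is enough for the response shown above.
    static byte[] find(byte[] data, int from, int to, int tag) {
        int pos = from;
        while (pos + 2 <= to) {
            int t = data[pos] & 0xFF;
            int len = data[pos + 1] & 0xFF;
            int start = pos + 2;
            if (len >= 0x80 || start + len > to) {
                throw new IllegalArgumentException("unsupported or truncated TLV");
            }
            if (t == tag) {
                return Arrays.copyOfRange(data, start, start + len);
            }
            pos = start + len;
        }
        return null;
    }

    // 6F (FCI template) -> A5 (proprietary template) -> 80 (two-byte value).
    static int version(byte[] fci) {
        byte[] template = find(fci, 0, fci.length, 0x6F);
        if (template == null) throw new IllegalArgumentException("no FCI template");
        byte[] proprietary = find(template, 0, template.length, 0xA5);
        if (proprietary == null) throw new IllegalArgumentException("no proprietary template");
        byte[] v = find(proprietary, 0, proprietary.length, 0x80);
        if (v == null || v.length != 2) throw new IllegalArgumentException("no 2-byte value");
        return ((v[0] & 0xFF) << 8) | (v[1] & 0xFF);
    }

    public static void main(String[] args) {
        // The response bytes listed above, without the status word.
        byte[] fci = fromHex("6f0f8407a0000004762010a50480020102");
        System.out.println("v" + version(fci)); // prints "v258"
    }
}
```

A general-purpose BER-TLV parser (multi-byte tags and lengths) is needed for real EMV data; the shortcut here is deliberate, to keep the conversion from `01 02` to 258 visible.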

Now that we have an app that can check for wallet applets (see sample code, screenshot above), we can verify if those are indeed managed by the Wallet app. It has a 'Reset Wallet' action on the Settings screen, which claims to delete 'payment information, card data and transaction history', but how does it affect the controller applets? Trying to select them after resetting Wallet shows that the controller applet has been removed, while the MIFARE manager applet is still selectable. We can assume that any payment applets have also been removed, but we still have no way to check. This leads us to the topic of our next section:

Exploring Google Wallet EMV applets

Google Wallet is compatible with PayPass terminals, and as such should follow relevant specifications. For contactless cards those are defined in the EMV Contactless Specifications for Payment Systems series of 'books'. Book A defines the overall architecture, Book B -- how to find and select a payment application, Book C -- the rules of the actual transaction processing for each 'kernel' (card company-specific processing rules), and Book D -- the underlying contactless communication protocol. We want to find out what payment applets are installed by Google Wallet, so we are most interested in Book B and the relevant parts of Book C.

Credit cards can host multiple payment applications, for example for domestic and international payment. Naturally, not all POS terminals know of or are compatible with all applications, so cards keep a public EMV app registry at a well known location. This practice is optional for contact cards, but is mandatory for contactless cards. The application is called 'Proximity Payment System Environment' (PPSE) and selecting it will be our first step. The application's AID is derived from the name: '2PAY.SYS.DDF01', which translates to '325041592E5359532E4444463031' in hex. Upon successful selection it returns a TLV data structure that contains the AIDs, labels and priority indicators of available applications (see Book B, 3.3.1 PPSE Data for Application Selection).

To process it, we will use and slightly extend the Java EMV Reader library, which does similar processing for contact cards. The library uses the standard Java Smart Card I/O API to communicate with cards, but as we pointed out in the first article, this API is not available on Android. Card communication interfaces are nicely abstracted, so we only need to implement them using Android's native NfcExecutionEnvironment. The main classes we need are SETerminal, which creates a connection to the card, SEConnection to handle the actual APDU exchange, and SECardResponse to parse the card response into status word and data bytes. As an added bonus, this takes care of encapsulating our rather ugly reflection code. We also create a PPSE class to parse the PPSE selection response into its components. With all those in place all we need to do is follow the EMV specification. Selecting the PPSE with the following command works at first try, but produces a response with 0 applications:

--> 00A404000E325041592E5359532E4444463031

<-- 6F10840E325041592E5359532E4444463031 9000

response hex :

6f 10 84 0e 32 50 41 59 2e 53 59 53 2e 44 44 46

30 31

response SW1SW2 : 90 00 (Success)

response ascii : o...2PAY.SYS.DDF01

response parsed :

6f 10 -- File Control Information (FCI) Template

84 0e -- Dedicated File (DF) Name

32 50 41 59 2e 53 59 53 2e 44 44 46 30 31 (BINARY)
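For reference, the PPSE AID and the SELECT command in the trace above can be derived from the application name as follows. This is a minimal sketch in plain Java; `PpseSelect` and its methods are hypothetical names of ours, and the command is built without an Le byte to match the trace.

```java
import java.nio.charset.StandardCharsets;

// Illustrative helper (hypothetical name): builds a SELECT-by-name APDU.
class PpseSelect {
    static final String PPSE_NAME = "2PAY.SYS.DDF01";

    // SELECT by DF name: CLA=00 INS=A4 P1=04 P2=00 Lc=<name length> <name>
    static byte[] selectByName(byte[] name) {
        if (name.length > 255) {
            throw new IllegalArgumentException("name too long for a short APDU");
        }
        byte[] apdu = new byte[5 + name.length];
        apdu[0] = 0x00;               // CLA
        apdu[1] = (byte) 0xA4;        // INS: SELECT
        apdu[2] = 0x04;               // P1: select by DF name
        apdu[3] = 0x00;               // P2: first or only occurrence
        apdu[4] = (byte) name.length; // Lc
        System.arraycopy(name, 0, apdu, 5, name.length);
        return apdu;
    }

    static String toHex(byte[] data) {
        StringBuilder sb = new StringBuilder();
        for (byte b : data) {
            sb.append(String.format("%02X", b));
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        // The AID is simply the ASCII encoding of the name (14 bytes).
        byte[] aid = PPSE_NAME.getBytes(StandardCharsets.US_ASCII);
        System.out.println(toHex(aid));
        // prints 325041592E5359532E4444463031
        System.out.println(toHex(selectByName(aid)));
        // prints 00A404000E325041592E5359532E4444463031
    }
}
```

The same `selectByName` call works for the controller and MIFARE manager AIDs, since they are selected with the same command and differ only in the name bytes.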

We have initialized the $10 prepaid card available when first installing Wallet, so something must be there. We know that the controller applet manages payment state, so after starting up and unlocking Wallet we finally get more interesting results (shown parsed and with some bits masked below). It turns out that locking the Wallet up effectively hides payment applications by deleting them from the PPSE. This, in addition to the fact that card emulation is available only when the phone's screen is on, provides better card security than physical contactless cards, some of which can easily be read by simply using an NFC-equipped mobile phone, as has been demonstrated.

Applications (2 found):

Application

AID: a0 00 00 00 04 10 10 AA XX XX XX XX XX XX XX XX

RID: a0 00 00 00 04 (Mastercard International [US])

PIX: 10 10 AA XX XX XX XX XX XX XX XX

Application Priority Indicator

Application may be selected without confirmation of cardholder

Selection Priority: 1 (1 is highest)

Application

AID: a0 00 00 00 04 10 10

RID: a0 00 00 00 04 (Mastercard International [US])

PIX: 10 10

Application Priority Indicator

Application may be selected without confirmation of cardholder

Selection Priority: 2 (1 is highest)
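The 'Application Priority Indicator' lines in the listing come from a single byte per application. As defined in the EMV specifications, the high bit says whether cardholder confirmation is required and the low four bits give the selection order. A small sketch of that decoding follows; the class name is ours, and the raw byte values 0x01 and 0x02 are inferred from the parsed output above rather than copied from the trace.

```java
// Illustrative decoder (hypothetical name) for the one-byte
// Application Priority Indicator found in each PPSE directory entry.
class PriorityIndicator {
    // Bit 8 set: application cannot be selected without cardholder confirmation.
    static boolean needsConfirmation(int api) {
        return (api & 0x80) != 0;
    }

    // Bits 4..1: selection order, 1 is highest; 0 means no priority assigned.
    static int priority(int api) {
        return api & 0x0F;
    }

    static String describe(int api) {
        String confirm = needsConfirmation(api)
                ? "Application cannot be selected without confirmation of cardholder"
                : "Application may be selected without confirmation of cardholder";
        return confirm + "; Selection Priority: " + priority(api);
    }

    public static void main(String[] args) {
        // Assumed raw values matching the two entries listed above.
        System.out.println(describe(0x01));
        System.out.println(describe(0x02));
    }
}
```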

One of the applications is the well known MasterCard credit or debit application, and there is another MasterCard app with a longer AID and higher priority (1, the highest). The recently announced update to Google Wallet allows you to link practically any card to your Wallet account, but transactions are processed by a single 'virtual' MasterCard and then billed back to your actual credit card(s). It is our guess that the first application in the list above represents this virtual card. The next step in the EMV transaction flow is selecting the preferred payment app, but here we hit a snag: selecting each of the apps always fails with the 0x6999 ('Applet selection failed') status. It has been reported that this was possible in previous versions of Google Wallet, but has been blocked to prevent relay attacks and stop Android apps from extracting credit card information from the SE. This leaves us with using the NFC interface if we want to find out more.

Most open-source tools for card analysis, such as cardpeek and Java EMV Reader, were initially developed for contact cards, and therefore need a connection to a PC/SC-compliant reader to operate. If you have a dual interface reader that provides PC/SC drivers you get this for free, but for a standalone NFC reader we need libnfc, ifdnfc and PCSC lite to complete the PC/SC stack on Linux. Getting those to play nicely together can be a bit tricky, but once it's done card tools work seamlessly. Fortunately, selection via the NFC interface is successful and we can proceed with the next steps in the EMV flow: initiating processing by sending the GET PROCESSING OPTIONS command and reading relevant application data using the READ RECORD command. For compatibility reasons, EMV payment applications contain data equivalent to that found on the magnetic stripe of physical cards. This includes account number (PAN), expiry date, service code and card holder name. EMV-compatible POS terminals are required to support transactions based on this data only ('Mag-stripe mode'), so some of it could be available on Google Wallet as well. Executing the needed READ RECORD commands shows that it is indeed found on the SE, and both MasterCard applications are linked to the same mag-stripe data. The data is as usual in TLV format, and relevant tags and format are defined in EMV Book C-2. When parsed it looks like this for the Google prepaid card (slightly masked):

Track 2 Equivalent Data:

Primary Account Number (PAN) - 5430320XXXXXXXX0

Major Industry Identifier = 5 (Banking and financial)

Issuer Identifier Number: 543032 (Mastercard, UNITED STATES OF AMERICA)

Account Number: XXXXXXXX

Check Digit: 0 (Valid)

Expiration Date: Sun Apr 30 00:00:00 GMT+09:00 2017

Service Code - 101:

1 : Interchange Rule - International interchange OK

0 : Authorisation Processing - Normal

1 : Range of Services - No restrictions

Discretionary Data: 0060000000000
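To make the structure of the listing above concrete, here is a rough sketch of how Track 2 Equivalent Data can be split into its fields and how the PAN check digit is verified with the Luhn algorithm. The `Track2` class is our own illustration, not the Java EMV Reader code. Since the real PAN is masked, the sample uses a well-known MasterCard test number (5555555555554444) combined with the expiry, service code and discretionary data shown above.

```java
import java.util.Locale;

// Illustrative parser (hypothetical name) for Track 2 Equivalent Data given as
// a hex string: PAN, 'D' separator, expiry (YYMM), service code (3 digits),
// discretionary data, and an optional trailing 'F' pad nibble.
class Track2 {
    final String pan;
    final String expiry;
    final String serviceCode;
    final String discretionary;

    private Track2(String pan, String expiry, String serviceCode, String discretionary) {
        this.pan = pan;
        this.expiry = expiry;
        this.serviceCode = serviceCode;
        this.discretionary = discretionary;
    }

    static Track2 parse(String hex) {
        String s = hex.toUpperCase(Locale.ROOT);
        if (s.endsWith("F")) {
            s = s.substring(0, s.length() - 1); // drop padding nibble
        }
        int sep = s.indexOf('D');
        if (sep < 1 || s.length() < sep + 8) {
            throw new IllegalArgumentException("malformed track 2 data");
        }
        return new Track2(
                s.substring(0, sep),
                s.substring(sep + 1, sep + 5),
                s.substring(sep + 5, sep + 8),
                s.substring(sep + 8));
    }

    // Luhn check: double every second digit from the right, sum the digits,
    // and require the total to be a multiple of 10.
    static boolean luhnValid(String pan) {
        int sum = 0;
        boolean dbl = false;
        for (int i = pan.length() - 1; i >= 0; i--) {
            int d = pan.charAt(i) - '0';
            if (d < 0 || d > 9) return false;
            if (dbl) {
                d *= 2;
                if (d > 9) d -= 9;
            }
            sum += d;
            dbl = !dbl;
        }
        return sum % 10 == 0;
    }

    public static void main(String[] args) {
        // Test PAN (not the masked Wallet PAN) + fields from the listing above.
        String sample = "5555555555554444" + "D" + "1704" + "101" + "0060000000000" + "F";
        Track2 t = parse(sample);
        System.out.println("MII: " + t.pan.charAt(0));              // 5
        System.out.println("IIN: " + t.pan.substring(0, 6));        // 555555
        System.out.println("Check digit valid: " + luhnValid(t.pan)); // true
        System.out.println("Expiry (YYMM): " + t.expiry);           // 1704
        System.out.println("Service code: " + t.serviceCode);       // 101
        System.out.println("Discretionary: " + t.discretionary);    // 0060000000000
    }
}
```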

As you can see, it does not include the card holder name, but all the other information is available, as per the EMV standard. We even get the 'transaction in progress' animation on screen while our reader is communicating with Google Wallet. We can also get the PIN try counter (set to 0, in this case meaning disabled), and a transaction log in the format shown below. We can't verify if the transaction log is used though, since Google Wallet, like a lot of the newer Google services, happens to be limited to the US.

Transaction Log:

Log Format:

Cryptogram Information Data (1 byte)

Amount, Authorised (Numeric) (6 bytes)

Transaction Currency Code (2 bytes)

Transaction Date (3 bytes)

Application Transaction Counter (ATC) (2 bytes)

This was fun, but it doesn't really show much besides the fact that Google Wallet's virtual card(s) comply with the EMV specifications. What is more interesting is that the controller applet APDU commands that toggle contactless payment and modify the PPSE don't require additional application authentication and can be issued by any app that is whitelisted to use the secure element. The controller applet most probably doesn't store any really sensitive information, but as long as it allows its state to be modified by third party applications, we are unlikely to see any other app besides Google Wallet whitelisted on production devices, unless of course more fine-grained SE access control is implemented in Android.

Fine-grained SE access control

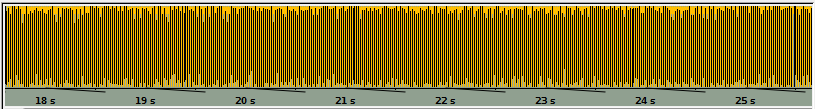

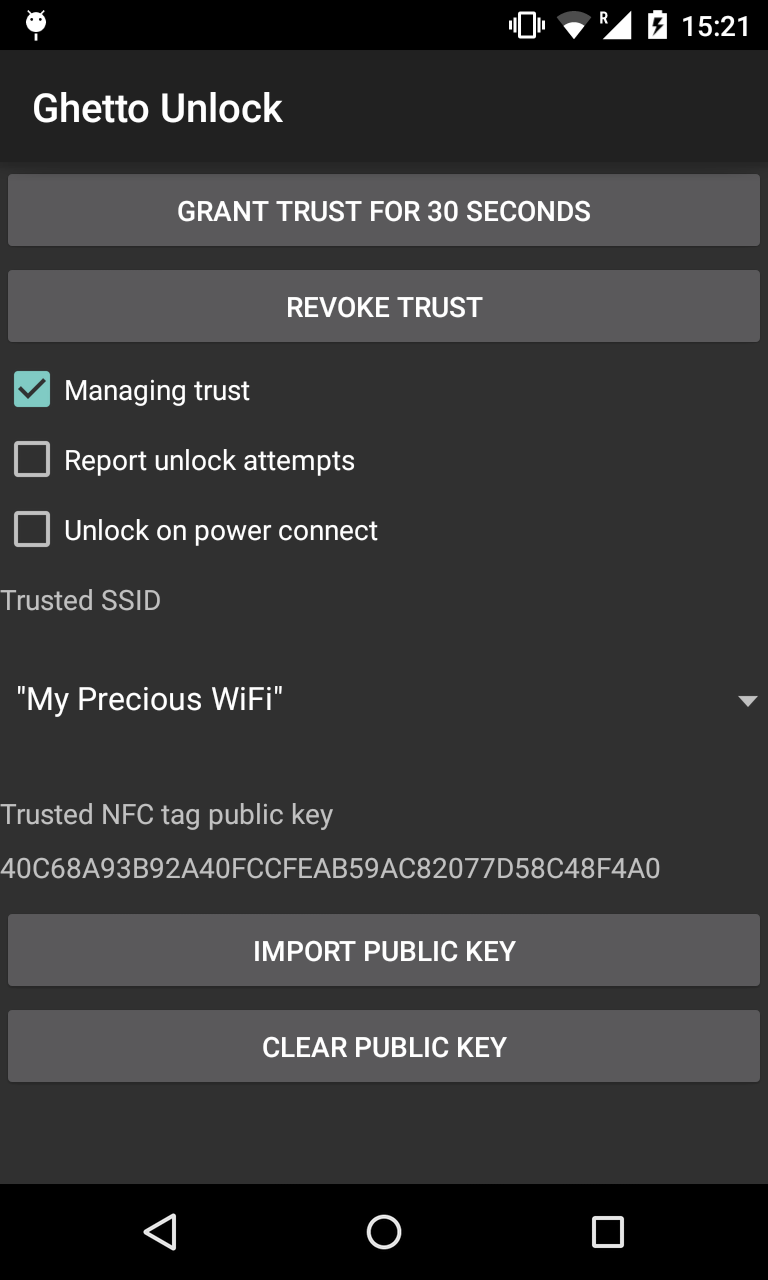

The fact that Google Wallet state can be modified by third party apps (granted access to the SE, of course) leads us to another major complication with SE access on mobile devices. While the data on the SE is securely stored and access is controlled by the applets that host it, once an app is allowed access, it can easily perform a denial of service attack against the SE or specific SE applications. Attacks can range from locking the whole SE by repeatedly executing failed authentication attempts until the Card Manager is blocked (a GP-compliant card goes into the TERMINATED state usually after 10 unsuccessful tries), to application-specific attacks such as blocking a cardholder verification PIN or otherwise changing a third party applet state. Another, more sophisticated attack, which is harder to achieve and possible only on connected devices, is a relay attack. In this attack, the phone's Internet connection is used to receive and execute commands sent by another remote phone, enabling the remote device to emulate the SE of the target device without physical proximity. The way to mitigate those attacks is to exercise finer control on what apps that access the SE can do by mandating that they can only select specific applets or only send a pre-approved list of APDUs. This is supported by the JSR-177 Security and Trust Services API, which only allows connection to one specific applet and only grants those connections to applications with a trusted signature (currently implemented in the BlackBerry 7 API). JSR-177 also provides the ability to restrict APDUs by matching them against an APDU mask to determine whether they should be allowed or not. SEEK for Android goes one step further than BlackBerry by supporting fine-grained access control with access policy stored on the SE. The actual format of ACL rules and protocols for managing them are defined in the GlobalPlatform Secure Element Access Control standard, which is relatively new (v1.0 was released in May 2012).
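To illustrate the APDU mask idea, the sketch below filters commands by comparing the masked 4-byte header (CLA, INS, P1, P2) against an allowed value. It is a simplified model written for this post, not the JSR-177 or GlobalPlatform implementation: the `ApduFilter` class is hypothetical, and the comparison rule (header AND mask equals value AND mask) is our reading of how such masks are meant to work.

```java
// Illustrative APDU header filter (hypothetical name). A rule consists of a
// 4-byte header value and a 4-byte mask; bits cleared in the mask are ignored.
class ApduFilter {
    private final int value;
    private final int mask;

    ApduFilter(int value, int mask) {
        this.value = value;
        this.mask = mask;
    }

    // Packs CLA, INS, P1 and P2 into one int for comparison.
    static int header(byte[] apdu) {
        return ((apdu[0] & 0xFF) << 24)
                | ((apdu[1] & 0xFF) << 16)
                | ((apdu[2] & 0xFF) << 8)
                | (apdu[3] & 0xFF);
    }

    boolean allows(byte[] apdu) {
        if (apdu == null || apdu.length < 4) {
            return false; // not a well-formed command APDU
        }
        return (header(apdu) & mask) == (value & mask);
    }

    public static void main(String[] args) {
        // Allow exactly SELECT by name (00 A4 04 00).
        ApduFilter selectOnly = new ApduFilter(0x00A40400, 0xFFFFFFFF);
        // Allow READ RECORD (00 B2) with any P1/P2.
        ApduFilter readRecord = new ApduFilter(0x00B20000, 0xFFFF0000);

        byte[] select = {0x00, (byte) 0xA4, 0x04, 0x00, 0x00};
        byte[] read = {0x00, (byte) 0xB2, 0x01, 0x0C, 0x00};
        byte[] install = {(byte) 0x80, (byte) 0xE6, 0x02, 0x00, 0x00};

        System.out.println(selectOnly.allows(select));  // true
        System.out.println(readRecord.allows(read));    // true
        System.out.println(selectOnly.allows(install)); // false
        System.out.println(readRecord.allows(install)); // false
    }
}
```

A real access control enforcer would combine several such rules per applet and per calling application certificate; the point here is only that header-level filtering is cheap to evaluate before a command ever reaches the SE.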
As we have seen, the current (4.0 and 4.1) stock Android versions do restrict access to the SE to trusted applications by whitelisting their certificates (a hash of those would have probably sufficed) in /etc/nfcee_access.xml, but once an app is granted access it can select any applet and send any APDU to the SE. If third party apps that use the SE are to be allowed in Android, more fine-grained control needs to be implemented by at least limiting the applets SE-whitelisted Android apps can select.

Because for most applications the SE is used in conjunction with NFC, an SE app needs to be notified of relevant NFC events such as RF field detection or applet selection via the NFC interface. Disclosure of such events to malicious applications can also potentially lead to denial of service attacks, which is why access to them needs to be controlled as well. The GP SE access control specification allows rules for controlling access to NFC events to be managed along with applet access rules by saving them on the SE. In Android, global events are implemented by using broadcasts and interested applications can create and register a broadcast receiver component that will receive such broadcasts. Broadcast access can be controlled with standard Android signature-based permissions, but that has the disadvantage that only apps signed with the system certificate would be able to receive NFC events, effectively limiting SE apps to those created by the device manufacturer or MNO. Android 4.x therefore uses the same mechanism employed to control SE access -- whitelisting application certificates. Any application registered in nfcee_access.xml can receive the broadcasts listed below. As you can see, besides RF field detection and applet selection, Android offers notifications for higher-level events such as EMV card removal or MIFARE sector access. By adding a broadcast receiver to our test application as shown below, we were able to receive AID_SELECTED and RF field-related broadcasts. AID_SELECTED carries an extra with the AID of the selected applet, which allows us to start a related activity when an applet we support is selected. APDU_RECEIVED is also interesting because it carries an extra with the received APDU, but that doesn't seem to be sent, at least not in our tests.

<receiver android:name="org.myapp.nfc.SEReceiver" >

<intent-filter>

<action android:name="com.android.nfc_extras.action.AID_SELECTED" />

<action android:name="com.android.nfc_extras.action.APDU_RECEIVED" />

<action android:name="com.android.nfc_extras.action.MIFARE_ACCESS_DETECTED" />

<action android:name="android.intent.action.MASTER_CLEAR_NOTIFICATION" />

<action android:name="com.android.nfc_extras.action.RF_FIELD_ON_DETECTED" />

<action android:name="com.android.nfc_extras.action.RF_FIELD_OFF_DETECTED" />

<action android:name="com.android.nfc_extras.action.EMV_CARD_REMOVAL" />

<action android:name="com.android.nfc.action.INTERNAL_TARGET_DESELECTED" />

</intent-filter>

</receiver>