In a previous post we looked at disk encryption enhancements introduced in Android 5.0. That article was written based on the Lollipop preview release, before the platform source code was available. While the post got most of the details about hardware-backed key protection right (the official documentation has since been released), it was overly optimistic in expecting that high-end Lollipop devices would ship with hardware-accelerated disk encryption. Android 5.0 did come with disk encryption enabled by default (at least on Nexus devices), but FDE also brought some performance problems, and many Android enthusiasts rushed to disable it. While slower disk access generally doesn't affect perceived performance when using a particular app, longer load times can add up and result in slower switching between apps, as well as longer boot times. In order to improve performance without sacrificing device security, Android 5.1 integrated support for hardware-accelerated disk encryption on devices that provide dedicated cryptographic hardware, such as the Nexus 6. Unfortunately, this feature ended up disabled in the current Android release, but hopefully it will be turned back on in a future release.

This post will look into the implementation of hardware-backed disk encryption on the Nexus 6, show how it improves performance, and finally describe some of the problems of the current implementation.


Kernel crypto

As previously discussed, Android's FDE implementation is based on the dm-crypt device-mapper target. As such, it performs cryptographic operations via the interfaces provided by the Linux kernel crypto API. The kernel crypto API defines a standard, extensible interface to ciphers and other data transformations implemented in the kernel (or as loadable modules). The API supports symmetric ciphers, AEAD ciphers, message digests and random number generators, collectively referred to as 'transformations'. All transformations have a name and a priority, as well as additional properties that describe their block size, supported key sizes, and so on. For example, a desktop Linux system may support the following:

    $ cat /proc/crypto
    ...
    name         : aes
    driver       : aes-generic
    module       : kernel
    priority     : 100
    refcnt       : 1
    selftest     : passed
    type         : cipher
    blocksize    : 16
    min keysize  : 16
    max keysize  : 32
    ...
    name         : aes
    driver       : aes-aesni
    module       : kernel
    priority     : 300
    refcnt       : 1
    selftest     : passed
    type         : cipher
    blocksize    : 16
    min keysize  : 16
    max keysize  : 32

    name         : aes
    driver       : aes-asm
    module       : kernel
    priority     : 200
    refcnt       : 1
    selftest     : passed
    type         : cipher
    blocksize    : 16
    min keysize  : 16
    max keysize  : 32
    ...

Here we see three different implementations of the aes transformation, all built into the kernel, but with different priorities. When creating an instance of a particular transformation, clients of the crypto API only specify its name and the kernel automatically returns the implementation with the highest priority. In this particular example, the aes-aesni implementation (which takes advantage of the AES-NI instruction set available on recent x86 CPUs) will be returned. New implementations can be added using the crypto_register_alg() and crypto_register_algs() functions.

The API provides single-block ciphers and hashes, which can be combined in order to provide higher-level cryptographic constructs via 'templates'. For example, AES in CBC mode is specified with the cbc(aes) template. Templates can be nested in order to request composite transformations that include more than one cryptographic primitive.
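To make the priority-based lookup concrete, here is a minimal user-space sketch in C. It is not kernel code: the `alg_entry` structure, the `select_alg()` function and the `desktop_algs` table are hypothetical names introduced purely for illustration, and the table simply mirrors the three aes entries from the /proc/crypto listing.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical, simplified stand-in for a registered transformation. */
struct alg_entry {
    const char *name;    /* generic name clients ask for, e.g. "aes" */
    const char *driver;  /* implementation name, e.g. "aes-aesni" */
    int priority;        /* higher value wins */
};

/* Return the highest-priority entry registered under `name`, or NULL
 * if no implementation with that name exists. */
const struct alg_entry *select_alg(const struct alg_entry *algs,
                                   size_t count, const char *name)
{
    const struct alg_entry *best = NULL;

    assert(algs != NULL && name != NULL);
    for (size_t i = 0; i < count; i++) {
        if (strcmp(algs[i].name, name) != 0)
            continue;  /* different transformation name */
        if (best == NULL || algs[i].priority > best->priority)
            best = &algs[i];
    }
    return best;
}

/* The three "aes" entries from the desktop /proc/crypto listing. */
const struct alg_entry desktop_algs[] = {
    { "aes", "aes-generic", 100 },
    { "aes", "aes-aesni",   300 },
    { "aes", "aes-asm",     200 },
};
```

With this table, `select_alg(desktop_algs, 3, "aes")` picks the aes-aesni entry because 300 is the highest priority. The same logic suggests why a hardware driver registered under a generic name with a high priority would be handed to every kernel client that asks for that name.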

The API defines synchronous and asynchronous versions of cryptographic operations. Asynchronous operations return immediately and deliver their result via a callback, while synchronous operations block until the result is available. The crypto API also provides a user space interface via a dedicated socket type, AF_ALG.

Accelerating dm-crypt

dm-crypt parses the cipher specification (aes-cbc-essiv:sha256 in stock Android) passed as part of its mapping table and instantiates the corresponding transforms via the kernel crypto API. Thus, in order to have dm-crypt use hardware acceleration, one has to either register a hardware-backed AES implementation with a high priority (which may affect other kernel services), or use a unique AES transformation name and change the mapping table accordingly.

Pretty much all SoCs used in current Android devices come with some sort of AES-capable hardware, usually in order to implement efficient DRM. OMAP devices provide ecb(aes), cbc(aes), and ctr(aes) implementations (in omap-aes.c) backed by the OMAP Crypto Engine; Tegra devices provide ecb(aes), cbc(aes), and ofb(aes) (in tegra-aes.c) backed by NVIDIA's bitstream engine. ARMv8 devices offer an AES implementation which takes advantage of the dedicated aese, aesd, and aesmc instructions of the CPU. If the hardware-backed AES transformations available on these devices have higher priority than the corresponding software implementations, dm-crypt will automatically use them and take advantage of any acceleration (offloading to dedicated hardware or a co-processor) they provide.

Qualcomm crypto engine

Recent (and probably older, too) Qualcomm Snapdragon SoCs include a dedicated cryptographic module which provides hardware acceleration for commonly used algorithms such as AES and SHA-256. While publicly released details are quite scarce, the Snapdragon 805 and 810 SoCs have been FIPS 140-2 certified, and the certification documents offer some insight into the implementation and supported features.

The cryptographic hardware in the 805 is officially called the 'Crypto 5 Core' and provides hardware implementations of DES, 3DES and AES in various modes (ECB, CBC, etc.), authenticated encryption (AEAD), SHA-1 and SHA-256, HMAC, a hardware-seeded random number generator, as well as support for mobile communication algorithms like KASUMI and SNOW 3G.

The services provided by the crypto core are integrated into the Linux kernel in the form of several drivers: qce50 (QTI crypto engine), qcrypto (kernel crypto API driver), and qcedev (for user-space applications). qcrypto and qcedev both depend on qce50, but provide different interfaces. The actual crypto hardware can be accessed from either user space or kernel space, but not from both at the same time; therefore the documentation recommends that only one of the interfaces be enabled. Here's the driver structure diagram from the kernel documentation:

![]()

The qcrypto driver registers the following transformations with the kernel crypto API:

    $ grep -B1 -A2 qcrypto /proc/crypto|grep -v kernel
    name         : rfc4309(ccm(aes))
    driver       : qcrypto-rfc4309-aes-ccm
    priority     : 300
    --
    name         : ccm(aes)
    driver       : qcrypto-aes-ccm
    priority     : 300
    --
    name         : hmac(sha256)
    driver       : qcrypto-hmac-sha256
    priority     : 300
    --
    name         : hmac(sha1)
    driver       : qcrypto-hmac-sha1
    priority     : 300
    --
    name         : authenc(hmac(sha1),cbc(des3_ede))
    driver       : qcrypto-aead-hmac-sha1-cbc-3des
    priority     : 300
    --
    name         : authenc(hmac(sha1),cbc(des))
    driver       : qcrypto-aead-hmac-sha1-cbc-des
    priority     : 300
    --
    name         : authenc(hmac(sha1),cbc(aes))
    driver       : qcrypto-aead-hmac-sha1-cbc-aes
    priority     : 300
    --
    name         : qcom-sha256
    driver       : qcrypto-sha256
    priority     : 300
    --
    name         : qcom-sha1
    driver       : qcrypto-sha1
    priority     : 300
    --
    name         : qcom-xts(aes)
    driver       : qcrypto-xts-aes
    priority     : 300
    --
    name         : qcom-cbc(des3_ede)
    driver       : qcrypto-cbc-3des
    priority     : 300
    --
    name         : qcom-ecb(des3_ede)
    driver       : qcrypto-ecb-3des
    priority     : 300
    --
    name         : qcom-cbc(des)
    driver       : qcrypto-cbc-des
    priority     : 300
    --
    name         : qcom-ecb(des)
    driver       : qcrypto-ecb-des
    priority     : 300
    --
    name         : qcom-ctr(aes)
    driver       : qcrypto-ctr-aes
    priority     : 300
    --
    name         : qcom-cbc(aes)
    driver       : qcrypto-cbc-aes
    priority     : 300
    --
    name         : qcom-ecb(aes)
    driver       : qcrypto-ecb-aes
    priority     : 300

As you can see, some of them are registered with a generic transformation name (e.g., hmac(sha1)), while some have the qcom- prefix. Whether to use the generic or driver-specific name is controlled by the device tree configuration. The interesting algorithm in the list above is qcom-xts(aes). Unlike CBC and CTR, the XTS cipher mode is not a generic chaining mode, but has been specifically developed for the purposes of block-based disk encryption. XTS works on wide blocks which map nicely to disk sectors (or blocks) and efficiently generates a 'tweak' key, different for each encrypted block, by using the sector number and the offset into the sector as variable inputs. Compared to AES-CBC-ESSIV, XTS is more complex to implement, but less malleable (even though it is not an authenticated cipher), and is thus preferable.

The Linux kernel crypto API does support XTS, so technically dm-crypt could take advantage of the hardware-backed AES-XTS implementation in the Qualcomm CE without modifications. However, dm-crypt is designed to operate on 512-byte sectors and, if used as is with the Qualcomm CE, would result in many small requests to the cryptographic hardware, which is rather inefficient. Instead of trying to modify or tune dm-crypt, Qualcomm added a new device-mapper target for use with its SoCs: dm-req-crypt.

Introducing dm-req-crypt

dm-req-crypt works with encryption requests of up to 512KB and sends asynchronous encryption/decryption requests to the Snapdragon cryptographic module via the kernel crypto API interface, implemented by the qcrypto driver. Without going into the intricacies of kernel programming, here are the most important calls it uses to encrypt disk blocks:

    ...
    /* Allocate an asynchronous block cipher handle for Qualcomm's AES-XTS transform. */
    tfm = crypto_alloc_ablkcipher("qcom-xts(aes)", 0, 0);
    /* Allocate a request and register the completion callback. */
    req = ablkcipher_request_alloc(tfm, GFP_KERNEL);
    ablkcipher_request_set_callback(req, CRYPTO_TFM_REQ_MAY_BACKLOG,
                                    req_crypt_cipher_complete, &result);
    crypto_ablkcipher_clear_flags(tfm, ~0);
    /* Note the NULL key: the actual key is not supplied here. */
    crypto_ablkcipher_setkey(tfm, NULL, KEY_SIZE_XTS);
    /* Use the sector number as the IV. */
    memset(IV, 0, AES_XTS_IV_LEN);
    memcpy(IV, &clone->__sector, sizeof(sector_t));
    /* Attach the input/output scatterlists and start the encryption. */
    ablkcipher_request_set_crypt(req, req_sg_in, req_sg_out,
                                 total_bytes_in_req, (void *) IV);
    rc = crypto_ablkcipher_encrypt(req);
    ...
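Two details of this excerpt can be reproduced in plain user-space C: how the IV is derived from the sector number, and how many crypto requests a given amount of I/O turns into. The sketch below is only an illustration under stated assumptions: `build_sector_iv()` and `requests_needed()` are hypothetical helpers, the sector number is modeled as a 64-bit value, and the 16-byte IV length is an assumed value for `AES_XTS_IV_LEN`.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define SECTOR_SIZE   512u            /* bytes per dm-crypt sector */
#define MAX_REQ_BYTES (512u * 1024u)  /* dm-req-crypt upper bound per request */
#define XTS_IV_LEN    16u             /* assumed value of AES_XTS_IV_LEN */

/* Mirror of the IV setup in the excerpt: zero the IV buffer, then copy
 * the sector number into its first bytes (native byte order). */
void build_sector_iv(uint8_t iv[XTS_IV_LEN], uint64_t sector)
{
    assert(iv != NULL);
    memset(iv, 0, XTS_IV_LEN);
    memcpy(iv, &sector, sizeof(sector));
}

/* Number of crypto requests needed to process io_bytes when a single
 * request can carry at most chunk bytes (rounded up). */
uint64_t requests_needed(uint64_t io_bytes, uint64_t chunk)
{
    assert(chunk > 0);
    return (io_bytes + chunk - 1) / chunk;
}
```

For a 512KB transfer, `requests_needed(MAX_REQ_BYTES, SECTOR_SIZE)` gives 1024 when every sector is submitted separately, while `requests_needed(MAX_REQ_BYTES, MAX_REQ_BYTES)` gives 1 when the whole transfer fits into a single request. With that arithmetic in mind, back to the dm-req-crypt excerpt itself.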

The dm-req-crypt excerpt first requests an asynchronous implementation of the qcom-xts(aes) transform, allocates a request structure and registers a completion callback, sets the encryption key and the IV, and finally starts the encryption operation by calling the crypto_ablkcipher_encrypt() function. The important bit here is that the input and output buffers (scatterlists) req_sg_in and req_sg_out can hold up to 1024 sectors, whereas dm-crypt always encrypts a single sector at a time. Another important detail is that the encryption key passed to the AES-XTS transformation object via crypto_ablkcipher_setkey() is actually NULL. We'll address this later in our discussion of Android 5.1's FDE implementation.

Integrating dm-req-crypt

As with dm-crypt, disk encryption and mounting are handled by the cryptfs module of the vold daemon. Because most of the heavy lifting is done by the device mapper kernel module, changing vold to support dm-req-crypt is fairly straightforward. The type of disk encryption stored in the crypto footer structure is changed to aes-xts, and the device mapper target used to create a DM device is changed from crypt (which maps to the dm-crypt driver) to req-crypt. These changes are triggered at build time by setting the CONFIG_HW_DISK_ENCRYPTION macro.

The disk encryption key passed to the kernel (also called 'master key') is generated, encrypted and stored in exactly the same way as with dm-crypt (see the diagram at the end of this post for details). When an encrypted device is booted, the PIN or password entered by the user is run through scrypt and then signed with a hardware-bound RSA key. The result is run through scrypt again to derive the key encryption key (KEK) and IV, which are in turn used to decrypt the master key stored in the crypto footer. The master key is then passed to the device mapper driver as part of the mapping table via an ioctl() call. However, the dm-req-crypt implementation completely ignores the passed cipher string, encryption key and IV offset, and only uses the device path and start sector parameters. As we saw in the previous section, the key passed to the kernel crypto API is also NULL, so where does the actual disk encryption key come from?

Key management

The key management implementation is unfortunately proprietary and depends on the Qualcomm Secure Execution Environment (QSEE, also used to implement the hardware-backed keystore). That said, the glue code that integrates it with vold, as well as the kernel driver, are open source, so we can get a fairly good idea of how the system works. The disk encryption key is set, updated and cleared using the cryptfs_hw glue library. This library merely loads several functions from the proprietary libQSEEComAPI.so library using dlopen() and provides wrappers around them. For example, the disk encryption key is set by calling set_hw_device_encryption_key(), which in turn calls QSEECom_update_key_user_info() from the proprietary library. This function sends commands to the secure OS via the qseecom kernel driver, which is visible to user space as the /dev/qseecom device.

Generating a disk encryption key causes the qseecom driver to request loading a trusted app in the secure OS, and then to send the QSEOS_GENERATE_KEY command, which kicks off key generation. Generated keys appear to be stored on the ssd ('secure storage device'?) partition, which points to /dev/block/mmcblk0p34 on the Nexus 6. After the key is generated, it is loaded into the hardware crypto engine using the QSEOS_SET_KEY command and can henceforth be used for encryption or decryption.

Using HW-accelerated FDE

As discussed in the 'Integrating dm-req-crypt' section, two things are needed to enable hardware-accelerated disk encryption: a vold binary with dm-req-crypt support and the libcryptfs_hw library. And, of course, all of the proprietary bits and pieces that make up the QSEE need to be in place. Thus it is easier to start with a stock 5.1 image, rather than build one from AOSP, because some of the required proprietary binaries seem to be missing from the officially released tarballs. Once everything is in place, encryption works exactly as before: if the fstab.shamu file includes the forceencrypt flag, the device will be encrypted on first boot; otherwise you need to kick off encryption from Settings->Security->Encrypt phone. One thing to note is that there is no way to transition a dm-crypt encrypted partition to dm-req-crypt, so if the device is already encrypted, you need to wipe the userdata partition first. After the encryption completes, the crypto footer (in the metadata partition on the N6) will look like this:

    Android FDE crypto footer
    -------------------------
    Magic            : 0xD0B5B1C4
    Major Version    : 1
    Minor Version    : 3
    Footer Size      : 2320 bytes
    Flags            : 0x00000004
    Key Size         : 128 bits
    Failed Decrypts  : 0
    Crypto Type      : aes-xts
    Encrypted Key    : CC43B0AE14BF27BAFE4709A102A96140
    Salt             : 1BB69D5DE1132F15D024E65370C29F33
    KDF              : scrypt+keymaster
    N_factor         : 15 (N=32768)
    r_factor         : 3 (r=8)
    p_factor         : 1 (p=2)
    crypt type       : PIN
    FS size          : 55615232
    encrypted upto   : 0
    hash first block : 000000000000000000000000000000...
    scrypted IK      : 8B6DDC19F047331740B31B0F41E4EC5F...

The important bit in the footer is the crypto type, which is set to aes-xts. Because the actual disk encryption key is managed by the crypto engine, all other parameters (encrypted key, salt, etc.) are only used when verifying the user PIN or password. On boot, vold checks the value of the crypto type, and if it is set to aes-xts, loads the disk encryption key using the cryptfs_hw library, and then initializes the dm-req-crypt device mapper target. From there, the system simply mounts the created dm-0 device as /data, and all reads and writes are decrypted/encrypted transparently.

Performance

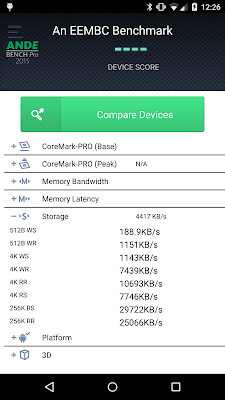

As can be expected, hardware-backed disk encryption performs better than software-based

As you can see, while disk access when using hardware-backed disk encryption is still about 40% slower than on an unencrypted device, random and sequential reads are almost two times faster compared to the software implementation (when reading 256KB blocks of data: 46.3MB/s vs. 25.1MB/s). So why isn't hardware-backed FDE enabled on current Nexus 6 builds?

dm-crypt implementation. The screenshots below show the actual numbers, as measured by the AndEBenchPro application ('low-tech'dd read/write results are similar).| No FDE | Software FDE | Hardware FDE |

As you can see, while disk access when using hardware-backed disk encryption is still about 40% slower than on an unencrypted device, random and sequential reads are almost two times faster compared to the software implementation (when reading 256KB blocks of data: 46.3MB/s vs. 25.1MB/s). So why isn't hardware-backed FDE enabled on current Nexus 6 builds?

Stability problems

Unfortunately, while the current implementation performs pretty well, there are still some problems, especially when the device is in sleep mode. If the device is in sleep mode for a relatively long period of time, read errors can occur, and the userdata partition may be mounted read-only (which wreaks havoc with the system's content providers); the device may even power off. While a reboot seems to fix the issue, if the userdata partition was mounted read-only, the SQLite databases storing system configuration and accounts may get corrupted, which in some cases can only be fixed by a factory reset. Thus, hardware-accelerated disk encryption is unfortunately not quite suitable for daily use on the Nexus 6 at the moment.

The OnePlus One (which has a Snapdragon 801 SoC), running CyanogenOS 12, also includes a dm-req-crypt-based FDE implementation which is enabled out of the box (disk encryption has to be triggered manually though). The FDE implementation on the OnePlus One seems to be quite stable, with comparable performance (50MB/s random read), so hopefully the problem on the Nexus 6 is a software one and can be resolved with a kernel update.

Summary

Disk encryption on Android can be accelerated by adding a kernel crypto API driver which takes advantage of the SoC's cryptographic hardware. This allows block encryption to be offloaded from the main CPU(s), and improves disk access times. Devices based on recent Qualcomm Snapdragon SoCs, such as the Nexus 6 and the OnePlus One, can take advantage of the SoC's crypto core module using the qcedev and qcrypto kernel drivers. A dedicated disk encryption device mapper target, dm-req-crypt, which batches encryption requests in order to increase throughput, is also supported. Additionally, disk encryption keys are managed through a TEE secure app, and thus are not accessible by the Android OS, including the kernel. When using hardware-accelerated FDE, disk access is almost two times faster compared to the software-based dm-crypt implementation, but unfortunately there are some major stability problems on the Nexus 6. Hopefully those will be fixed in the next Android release, and hardware-accelerated disk encryption will be enabled out of the box.