In the previous two posts we looked at the internal implementation of the Android credential storage, and how it is linked to the new

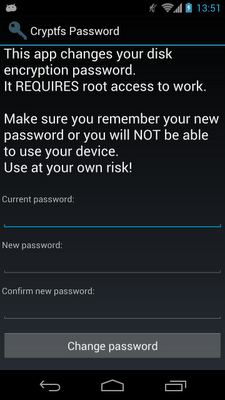

Storing user credentials such as passwords and private keys securely is of course essential, but why should we care about the trust store? As the name implies, the trust store determines who we trust when connecting to Internet servers or validating signed messages. While credentials are usually used proactively only when we authenticate to a particular service, the trust store is used every time we connect to a secure server. For example, each time you check GMail, Android connects to Google's severs using SSL and validates their certificates based on the device's trust store. Most users are unaware of this, unless some error occurs. Since the trust store is used practically all the time, and usually in the background, one could argue that it's even more important then credential storage. Up till Android 4.0, the OS trust store was hard wired into the firmware, and users had no control over it whatsoever. Certificates bundled in the store were chosen solely by the device manufacturer or carrier. The only way to make changes was to root your device, re-package the trusted certificates file and replace the original one (instructions from cacert.orghere). That is obviously not too practical, and a major obstacle to using Android in enterprise PKI's. In the wake of major CA's being compromised practically each month this year, tools that make changing the default trusted certificates in place have been developed, but using them still requires a rooted phone. Fortunately, ICS has made managing the trust store much more flexible, and gives the much needed control over who to trust to the user. Let's see what has changed.

Pre-ICS, the trust store was a single file:

Each file contains one CA certificate. The file names may look familiar: they are hashes of the CA subject names, as used in mod_ssl and other cryptographic software implemented using OpenSSL. This makes it easy to quickly find certificates without scanning the entire store. Also note the permissions of the directories:

If you examine the output of this code, you would notice that certificate aliases start with either the

Works pretty well, but there is one major problem with this code: it does not check revocation. Android's default

Of course, system applications such as the browser, email and VPN clients are also taking advantage of the new trust store, so connecting to a corporate Exchange server or a secure Web application should be as easy as installing the appropriate certificates. We'll see how well that works out in practice once I get a real ICS device (shouldn't be too long now...).

That concludes our discussion of the new credential and trust stores introduced in Android 4.0. To sum things up: users can now freely install and remove private keys and trusted certificates, as well as disable pre-installed CA certificates via the Settings app. Third-party applications can also do this via the new

KeyChain API introduced in ICS. As briefly mentioned in the second post, there is also a new TrustedCertificateStore class that manages user installed CA certificates. In this entry we will examine how the new trust store is implemented and how it is integrated in the framework and system applications.Storing user credentials such as passwords and private keys securely is of course essential, but why should we care about the trust store? As the name implies, the trust store determines who we trust when connecting to Internet servers or validating signed messages. While credentials are usually used proactively only when we authenticate to a particular service, the trust store is used every time we connect to a secure server. For example, each time you check GMail, Android connects to Google's severs using SSL and validates their certificates based on the device's trust store. Most users are unaware of this, unless some error occurs. Since the trust store is used practically all the time, and usually in the background, one could argue that it's even more important then credential storage. Up till Android 4.0, the OS trust store was hard wired into the firmware, and users had no control over it whatsoever. Certificates bundled in the store were chosen solely by the device manufacturer or carrier. The only way to make changes was to root your device, re-package the trusted certificates file and replace the original one (instructions from cacert.orghere). That is obviously not too practical, and a major obstacle to using Android in enterprise PKI's. In the wake of major CA's being compromised practically each month this year, tools that make changing the default trusted certificates in place have been developed, but using them still requires a rooted phone. Fortunately, ICS has made managing the trust store much more flexible, and gives the much needed control over who to trust to the user. Let's see what has changed.

Pre-ICS, the trust store was a single file: /system/etc/security/cacerts.bks, a native keystore file of Bouncy Castle (one of the JCE cryptographic providers used in Android). It contains all the CA certificates Android trusts and is used both by system apps such as the email client and browser, and by applications developed using the SDK. Since it resides on the read-only system partition, it cannot be changed even by system-level applications. The TrustedCertificateStore class newly introduced in ICS still reads system trusted certificates from /system/etc/security, but adds two new, mutable locations to store CA certificates in /data/misc/keychain: the cacerts-added and cacerts-removed directories. Let's see what's inside:

```
# ls -l /data/misc/keychain
drwxr-xr-x system system 2011-11-30 12:56 cacerts-added
drwxr-xr-x system system 2011-12-02 15:21 cacerts-removed
# ls -l /data/misc/keychain/cacerts-added
-rw-r--r-- system system 653 2011-11-29 18:34 30ef493b.0
-rw-r--r-- system system 815 2011-11-30 12:56 9a8df086.0
# ls -l /data/misc/keychain/cacerts-removed
-rw-r--r-- system system 1060 2011-12-02 15:21 00673b5b.0
```

Each file contains one CA certificate. The file names may look familiar: they are hashes of the CA subject names, as used in mod_ssl and other cryptographic software implemented using OpenSSL. This makes it easy to quickly find certificates without scanning the entire store. Also note the permissions of the directories:

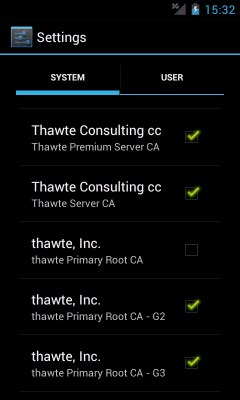

0775 system system guarantees that only the system user is able to add or remove certificates, but anyone can read them. As can be expected, adding trusted CA certificates is implemented by storing the certificate in cacerts-added under the appropriate file name. The two files above, 30ef493b.0 and 9a8df086.0, correspond to the certificates displayed in the 'User' tab of the Trusted credential system application (Settings->Security->Trusted credentials). But how are OS-trusted certificates disabled? Since pre-installed CA certificates are still stored in /system/etc/security (read-only), a CA is marked as not trusted by placing a copy of its certificate in cacerts-removed. Re-enabling is performed by simply removing the file. In this particular case, 00673b5b.0 is the thwate Primary Root CA, shown as disabled in the 'System' tab:TrustedCertificateStore is not available in the SDK, but it has a wrapper accessible via the standard JCE KeyStore API, TrustedCertificateKeyStoreSpi, that applications can use. Here's how we can use it to get the current list of trusted certificates::KeyStore ks = KeyStore.getInstance("AndroidCAStore");

ks.load(null, null);

Enumeration aliases = ks.aliases();

while (aliases.hasMoreElements()) {

String alias = aliases.nextElement();

X09Certificate cert = (X509Certificate)

ks.getCertificate(alias);

Log.d(TAG, "Subject DN: " +

cert.getSubjectDN().getName());

Log.d(TAG, "Issuer DN: " +

cert.getIssuerDN().getName());

}
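To make the directory scheme described earlier a bit more concrete, here is a minimal sketch of how the effective trust decision could be modelled: a CA is trusted if its file is in cacerts-added, or if it is a pre-installed one that has no copy in cacerts-removed. This is an illustration only, not the platform's TrustedCertificateStore. The class and method names are hypothetical, the system directory is passed in as a parameter rather than assumed, and matching by file name alone is a simplification (a real implementation would compare the certificates themselves).

```java
import java.io.File;
import java.util.Arrays;
import java.util.Collections;
import java.util.HashSet;
import java.util.Set;

/** Hypothetical model of the ICS trust decision, keyed by hash file name. */
final class TrustStoreModel {
    private final Set<String> system;  // pre-installed CA files (read-only)
    private final Set<String> added;   // files in cacerts-added
    private final Set<String> removed; // files in cacerts-removed

    TrustStoreModel(Set<String> system, Set<String> added, Set<String> removed) {
        this.system = new HashSet<String>(system);
        this.added = new HashSet<String>(added);
        this.removed = new HashSet<String>(removed);
    }

    /** Snapshots the file names currently present in the three directories. */
    static TrustStoreModel fromDirectories(File systemDir, File addedDir, File removedDir) {
        return new TrustStoreModel(names(systemDir), names(addedDir), names(removedDir));
    }

    private static Set<String> names(File dir) {
        String[] files = dir.list(); // null if the directory is missing or unreadable
        if (files == null) {
            return Collections.<String>emptySet();
        }
        return new HashSet<String>(Arrays.asList(files));
    }

    /** User-added CAs are trusted; system CAs are trusted unless marked as removed. */
    boolean isTrusted(String hashFileName) {
        if (added.contains(hashFileName)) {
            return true;
        }
        return system.contains(hashFileName) && !removed.contains(hashFileName);
    }
}
```

With the directory contents from the listing, 30ef493b.0 would come out as trusted and 00673b5b.0 as not trusted. None of this is needed to read the store through the KeyStore API, though, so back to the AndroidCAStore listing shown earlier.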

If you examine the output of this code, you would notice that certificate aliases start with either the

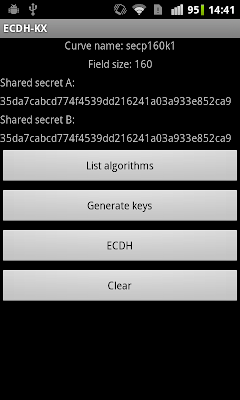

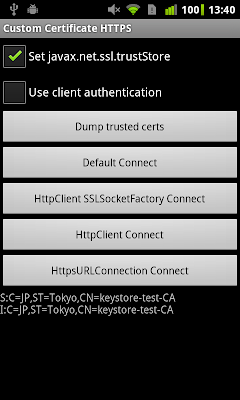

user: (for user installed certificates) or system: (for pre-installed ones) prefix, followed by the subject's hash value. This lets us easily access the OS's trusted certificates, but a real word application would be more interested in whether it should trust a particular server certificate, not what the current trust anchors are. ICS makes this very easy by integrating the TrustedCertificateKeyStoreSpi with Android's JSSE (secure sockets) implementation. The default TrustManagerFactory uses it to get a list of trust anchors, thus automatically validating server certificates against the system's currently trusted certificates. Higher-level code that uses HttpsURLConnection or HttpClient (both built on top of JSSE) should thus just work without needing to worry about creating and initializing a custom SSLSocketFactory. Here's how we can use the TrustManager to validate a certificate issued by a private CA (the CA certificate is already installed in the user trust store).X509Certificate[] chain = KeyChain.getCertificateChain(ctx,

"keystore-test-ee");

Log.d(TAG, "chain length: " + chain.length);

for (X509Certificate x : chain) {

Log.d(TAG, "Subject DN: "

+ x.getSubjectDN().getName());

Log.d(TAG, "Issuer DN: "

+ x.getIssuerDN().getName());

}

TrustManagerFactory tmf = TrustManagerFactory.getInstance("X509");

tmf.init((KeyStore) null);

TrustManager[] tms = tmf.getTrustManagers();

X509TrustManager xtm = (X509TrustManager) tms[0];

Log.d(TAG, "checking chain with " + xtm);

xtm.checkClientTrusted(chain, "RSA");

Log.d(TAG, "chain is valid");

This works pretty well, but there is one major problem with this code: it does not check revocation. Android's default TrustManager explicitly turns off revocation checking when validating the certificate chain. So even if the certificate had a valid CDP (CRL distribution point) extension pointing to a valid CRL, and the certificate was actually revoked, it would still validate fine in Android. What's missing here is the ability to dynamically fetch, cache and update revocation information as needed, based on information available in certificate extensions. Hopefully a future version of Android will add this functionality to make Android's PKI support complete.

Of course, system applications such as the browser, email and VPN clients also take advantage of the new trust store, so connecting to a corporate Exchange server or a secure Web application should be as easy as installing the appropriate certificates. We'll see how well that works out in practice once I get a real ICS device (shouldn't be too long now...).
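As for the revocation gap mentioned above, an application that obtains CRLs by itself could, in principle, run its own PKIX validation with revocation checking switched on, using the standard java.security.cert API. The sketch below is only that: the class and method names are made up for illustration, fetching and caching the CRLs is left out entirely, and how the provider on a given Android release behaves with these parameters is an assumption that would need to be verified on a device.

```java
import java.security.GeneralSecurityException;
import java.security.cert.CertPath;
import java.security.cert.CertPathValidator;
import java.security.cert.CertStore;
import java.security.cert.CertificateFactory;
import java.security.cert.CollectionCertStoreParameters;
import java.security.cert.PKIXParameters;
import java.security.cert.TrustAnchor;
import java.security.cert.X509CRL;
import java.security.cert.X509Certificate;
import java.util.Collection;
import java.util.List;
import java.util.Set;

/** Hypothetical helper: PKIX validation with revocation checking enabled. */
final class RevocationAwareValidator {

    /** Builds PKIX parameters that consult the CRLs supplied by the caller. */
    static PKIXParameters buildParams(Set<TrustAnchor> anchors, Collection<X509CRL> crls)
            throws GeneralSecurityException {
        PKIXParameters params = new PKIXParameters(anchors);
        params.setRevocationEnabled(true);
        // The CRLs must already have been downloaded; nothing is fetched here
        params.addCertStore(CertStore.getInstance(
                "Collection", new CollectionCertStoreParameters(crls)));
        return params;
    }

    /**
     * Validates a chain ordered from the end-entity certificate upwards.
     * The chain should not include the trust anchor certificate itself.
     * Throws CertPathValidatorException if validation fails.
     */
    static void validate(List<X509Certificate> chain, Set<TrustAnchor> anchors,
            Collection<X509CRL> crls) throws GeneralSecurityException {
        CertPath path = CertificateFactory.getInstance("X.509").generateCertPath(chain);
        CertPathValidator.getInstance("PKIX").validate(path, buildParams(anchors, crls));
    }
}
```

The trust anchors could be built from the certificates returned by the AndroidCAStore KeyStore, but the hard part remains exactly what is described above: getting fresh CRLs onto the device in the first place.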

That concludes our discussion of the new credential and trust stores introduced in Android 4.0. To sum things up: users can now freely install and remove private keys and trusted certificates, as well as disable pre-installed CA certificates via the Settings app. Third-party applications can also do this via the new KeyChain API, if the user grants the app the necessary permissions. The key and trust stores are fully integrated into the OS, so using standard secure communication and cryptographic Java APIs should just work, without the need for application-specific key stores. A key element required for full PKI support, revocation checking, is still missing, but the key and trust store functionality added in ICS is a huge step in making Android more secure, flexible and enterprise-friendly.